Adding test cases to a test scenario


In this section, you will learn how to define test cases in a test scenario. You will find information on the following topics:

Add test cases to a scenario

Assign Testers

Define run dependencies

Define value sets

Define agents

Organize the view

Add test cases to a scenario

There are different ways to add test cases to a test scenario.

You can add test cases through the navigation bar on the left side of an open test scenario. Click Test Cases to switch to a view where you can specify the test cases to be executed in this test scenario, then click the Add button in the ribbon bar.

A dialogue opens that lists all test cases in the project. You can search for a specific test case or apply one of your predefined filters. When you select a test case, you can choose whether to add it at the beginning or at the end of the test scenario. After adding all desired test cases, save your scenario.

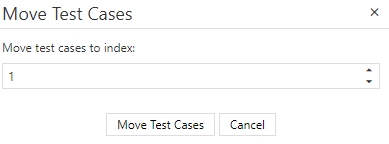

To change the order of the test cases, use the Move to button: click it and enter the index of the target position.

You can also add test cases to a test scenario using your clipboard. To do so, create a new test scenario. Select the test cases in the grid and copy them to the clipboard.

Then go back to the test scenario and select 'Test Cases' in the navigation bar. Click the arrow below the 'Add' button and select 'Add copied test cases from clipboard' to insert the copied test cases.

Alternatively, you can create a new test scenario directly from a set of test cases, or add them to an existing test scenario. To do so, select the test cases in the element browser of the navigation window, right-click the selection, and choose Create new test scenario.

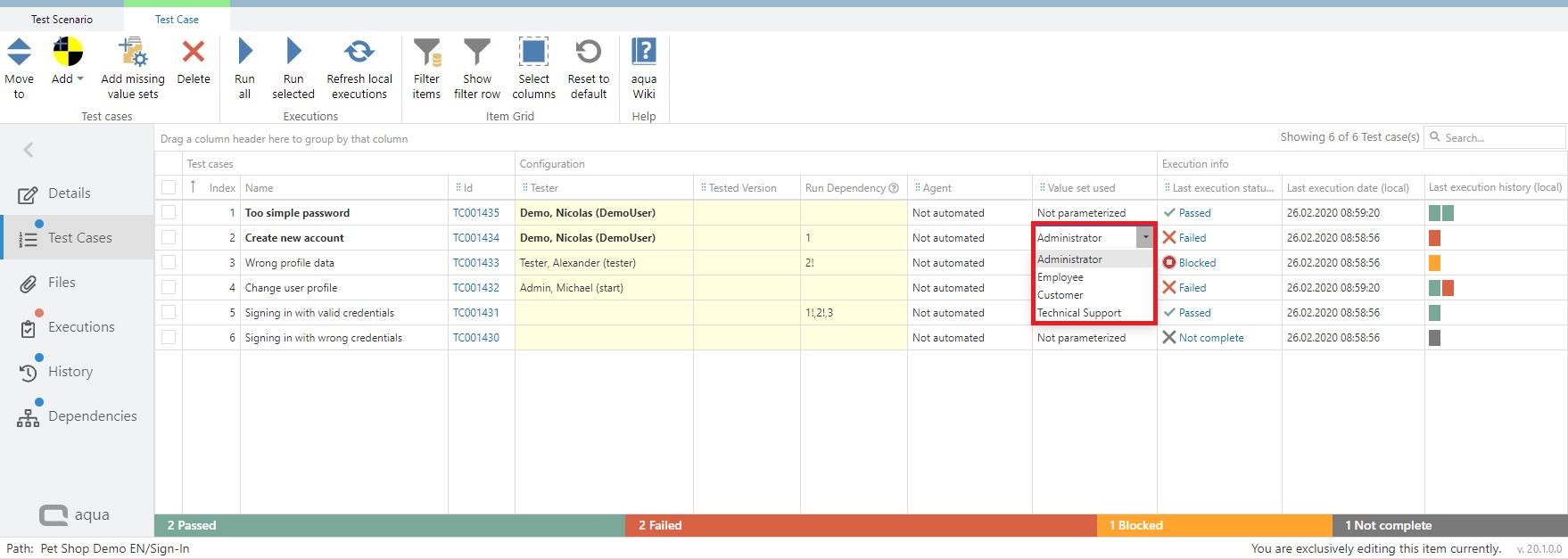

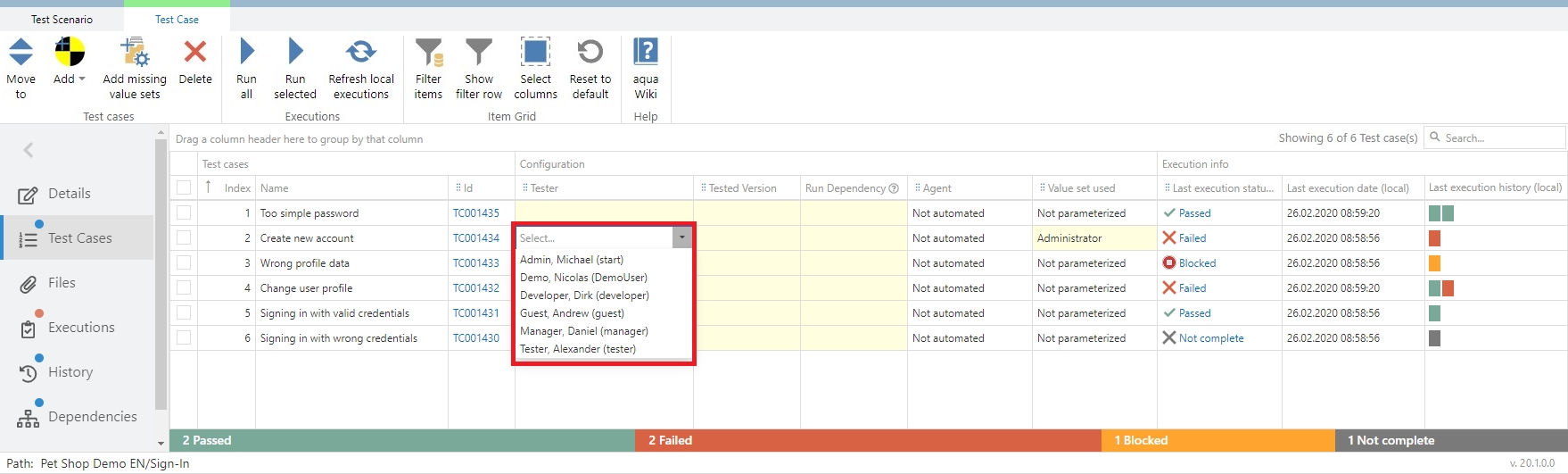

Assign Testers

You can assign a tester to each test case in the context of the scenario. Note that this does not let you overwrite the assignee defined in the test case itself. To assign a tester to a test case in the scenario, click the empty cell in the 'Tester' column. A drop-down list appears from which you can select a colleague assigned to the current project. If the selected tester is the current user, the name is displayed in bold.

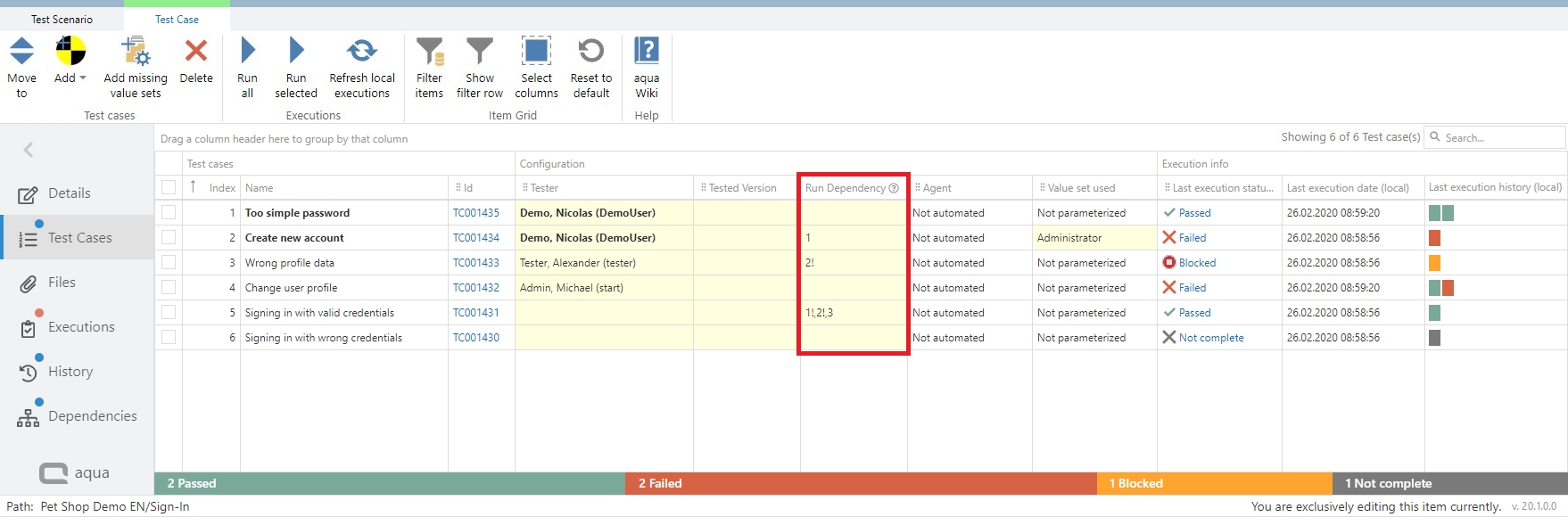

Define run dependencies

Once your test cases have been added to the test scenario, you can define run dependencies between them. This is useful, for instance, if a test case must pass before the next one can be executed, so that the dependent test cases are not executed when it fails. To define a dependency, enter a value in the Run Dependency column of the Test Cases view within the test scenario.

There are two types of run dependencies:

Soft dependencies ignore the result of the previous test case. For example, the first test case must be executed before the second can start, regardless of whether it passes or fails.

Hard dependencies take the result into account. For example, the first test case must pass before the second can start.

For a soft dependency, enter the index number of the test case on which the other test case depends. For a hard dependency, add an exclamation mark after the index number (for example, 1 for a soft dependency and 1! for a hard dependency).
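The soft/hard notation can be illustrated with a small Python sketch of how a Run Dependency value might be interpreted. This is not aqua's implementation: the function names `parse_run_dependency` and `can_start`, the status strings `"passed"` and `"failed"`, and the assumption that a cell contains a single index are all hypothetical.

```python
from __future__ import annotations

from typing import Optional

# Hypothetical sketch, not aqua's API: it only illustrates the difference
# between a soft dependency ("2") and a hard dependency ("2!").


def parse_run_dependency(value: str) -> Optional[tuple[int, bool]]:
    """Parse a Run Dependency cell such as '2' (soft) or '2!' (hard).

    Returns (index, is_hard), or None if the cell is empty.
    """
    value = value.strip()
    if not value:
        return None  # no dependency defined
    is_hard = value.endswith("!")  # trailing '!' marks a hard dependency
    index_text = value[:-1] if is_hard else value
    if not index_text.isdigit():
        raise ValueError(f"Invalid run dependency: {value!r}")
    return int(index_text), is_hard


def can_start(dependency: Optional[tuple[int, bool]], results: dict[int, str]) -> bool:
    """Decide whether a dependent test case may start.

    `results` maps the index of each finished test case to an assumed
    status string ('passed' or 'failed'); test cases that have not run
    yet are absent from the mapping.
    """
    if dependency is None:
        return True  # no dependency: the test case may start at any time
    index, is_hard = dependency
    if index not in results:
        return False  # the prerequisite has not been executed yet
    if is_hard:
        return results[index] == "passed"  # hard: the prerequisite must pass
    return True  # soft: any result is fine once the prerequisite has run
```

For example, with `results = {1: "failed"}`, `can_start(parse_run_dependency("1"), results)` returns `True`, while `can_start(parse_run_dependency("1!"), results)` returns `False`.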

Define value sets

When a test case is parameterized, its default value set is entered automatically. You can select one of the test case's other value sets in the Value set column.

Define agents

If the test cases in the scenario are automated, you can define an agent for their execution directly in the test scenario. If you do not define an agent here, one is assigned automatically during execution.

In aqua, you can only select an agent that supports the technology used to automate the test case.

To define an agent, click 'Test Cases' and use the 'Agent' column. Click the cell of the test case for which you want to define an agent, then select the agent from the drop-down list that appears. The agent defined in the scenario is always considered first.

Organize the view

To locate the test cases you need, you can customize the view: sort, group, and filter by any column, just as in the item grid. For faster navigation, drag and drop columns to group by them. In addition, the search bar in the top right corner provides text-based search and filtering across multiple columns, including Name, ID, Tester, Tested Version, Run Dependency, Agent, Value set used, and Last execution status.

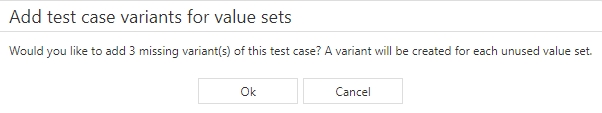

Use the 'Add missing value sets' button to add all other value sets (variants) of a test case to the test scenario as individual test cases.